Feature

How Europe’s far right used unlabelled AI to win votes — and now writes the rules

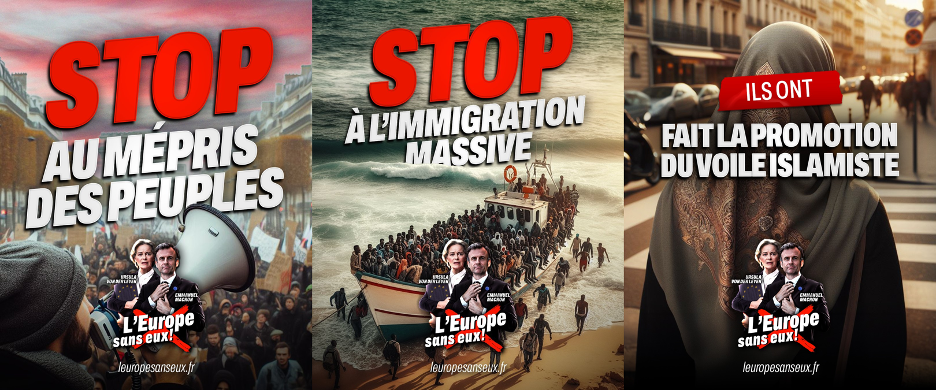

In the run-up to the 2024 European Parliament elections last June, several far-right political parties in France, Italy and Belgium used unlabelled generative-AI content to influence voters — despite having pledged to respect ethical campaigning standards.

Investigations by DFRLab, Alliance4Europe and AI Forensics identified 131 pieces of undeclared AI-generated or AI-manipulated content circulating across Instagram, Facebook, X, Telegram and VKontakte in the weeks before the vote.

The material ranged from fabricated images and AI-enhanced visuals to shallowfakes and cheapfakes: low-cost manipulations pairing out-of-context captions with misleading imagery.

Researchers found the content was used to deepen social divisions, push conspiratorial narratives and distort public debate.

The European Commission’s own post-election report corroborated the pattern, warning that political actors had used such material to “spread misleading narratives and amplify social divisions”.

The report acknowledges that civil society organisations and fact-checkers detected more than 100 instances of unlabelled generative-AI content shared by political parties, particularly in France, Belgium and Italy.

Contrary to fears of sophisticated deepfakes, most manipulations were simple, inexpensive, and targeted: out-of-context captions, fabricated migration scenes, and AI-enhanced images promoting Islamophobic narratives such as the so-called “Muslim Great Replacement”.

The parties behind it

The electoral consequences are tangible.

Dozens of MEPs now sitting in the European Parliament were elected from parties identified as having used AI-generated or manipulated content during the campaign: 29 from Rassemblement National, eight from Lega and one from Reconquête.

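Many of them now sit on committees directly responsible for shaping the EU’s response to disinformation, digital regulation and artificial intelligence, including the Civil Liberties, Justice and Home Affairs committee (LIBE), the Internal Market and Consumer Protection committee (IMCO) and the Parliament’s new special committee on the European Democracy Shield.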

The EU thus finds itself in a paradoxical position: lawmakers elected with the help of tactics its own institutions describe as dangerous to electoral integrity are now helping to draft the very rules intended to prevent those abuses.

The use of unlabelled AI content also violated the 2024 European Parliament Elections Code of Conduct, signed on 9 April by all political families, including the far-right group Identity and Democracy (ID).

Immediately after the elections, however, ID was dissolved and most of its members, including RN and Lega, joined the new Patriots for Europe (PfE) group, which did not carry over ID’s commitments.

The European framework contains no mechanism to sanction political actors for such breaches. As DFRLab researcher Valentin Châtelet notes, the code “can at most inform investigations under the Digital Services Act”, and even then, the DSA regulates platforms, not political parties.

This imbalance is reflected in the European Commission’s April 2024 election guidelines for online platforms, which outline steps that very large online platforms (VLOPs) and very large online search engines (VLOSEs) should take to counter disinformation and AI-generated manipulation.

Every recommendation targets platforms, not the politicians who generate and disseminate misleading material.

Civil society groups argue the EU is trying to secure elections by regulating only the infrastructure, not the political actors strategically abusing it.

The findings raise a fundamental question: how can the EU credibly regulate AI and disinformation when some of the lawmakers responsible for drafting those rules owe their seats partly to the use of unlabelled generative AI?

As political campaigns become increasingly digital, researchers warn that Europe risks normalising a model in which AI-driven manipulation becomes a routine electoral tool.

Back to 2025

The AI-fuelled tactics seen in the 2024 European elections have not only persisted, but also accelerated.

In 2025, AI-generated manipulation has already emerged as a problem in political communication during national elections across Europe.

In Ireland, for example, a fake broadcast announcing that a presidential candidate had withdrawn from the race circulated widely online. In the Czech Republic, analysts have detected a new wave of AI-manipulated content centred on migration, crime and even EU membership. In the Netherlands, authorities and watchdogs publicly warned about the risks of AI-driven manipulation, including misleading chatbots and algorithmic bias, that could undermine informed voting decisions.

In all these cases, the EU’s response has been limited: apart from general warnings, no binding enforcement actions have been taken, and no political consequence has followed for the actors behind the manipulative content.

As of now, the only comparable case in which authorities annulled election results over disinformation remains the 2024 presidential vote in Romania.

These incidents highlight a broader European threat.

Yet responsibility for regulating these technologies lies partly with MEPs from parties that benefited from such tactics last year. The disconnect is stark: legislators in Brussels who were elected with the help of similar tools are shaping the rules meant to stop their use.

Author Bio

Federica Tessari is a Brussels-based Italian journalist covering migration, security and European governance. Her reporting focuses on border mobility, asylum policy and the political dynamics shaping EU responses to global displacement.

Joana Soares is a Brussels-based Portuguese journalist writing about technology policy, privacy and digital rights. She reports on AI regulation and the political impact of emerging technologies across Europe.
